Conditional Generative Adversarial Networks (cGANs) are a variation of Generative Adversarial Networks (GANs) that incorporate additional conditioning information to guide the generation process. cGANs allow for more specific and controlled generation by conditioning the generator and discriminator networks on additional information, such as class labels, textual descriptions, or other modalities.

What is a conditional GAN?

A Conditional Generative Adversarial Network (cGAN) is a type of generative model that incorporates additional conditioning information to generate more specific and controlled outputs. In a traditional GAN, the generator network takes random noise as input and generates samples, while the discriminator network tries to distinguish between real and generated samples.

In a conditional GAN, both the generator and discriminator networks are conditioned on additional information, such as class labels, textual descriptions, or other modalities. This conditioning information is provided as input to both the generator and discriminator networks, allowing them to generate or discriminate samples based on specific conditions. The conditioning information helps guide the generation process, allowing the conditional GAN to generate samples that align with the given conditions.

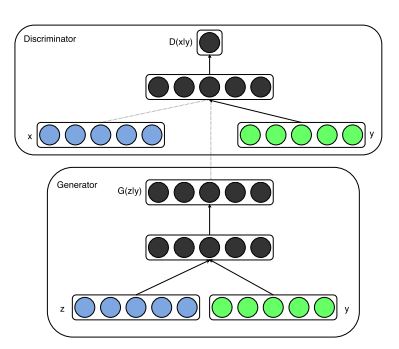

Conditional GAN network architecture

For example, in the case of image generation, the conditioning information could be a class label, and the conditional GAN can generate images that belong to the specified class. During training, the generator network is trained to generate samples that fool the discriminator into classifying them as real, while the discriminator network is trained to distinguish between real samples and generated samples.

The conditioning information is used to guide the training process and ensure that the generated samples align with the specified conditions. Overall, conditional GANs provide a way to generate more specific and controlled outputs by incorporating additional conditioning information into the generative model. They have been successfully applied in various tasks such as image-to-image translation, text-to-image synthesis, and style transfer.

Here is an overview of how cGANs work:

- Architecture:

- Generator: The generator network takes random noise and conditioning information as input and generates synthetic samples.

- Discriminator: The discriminator network receives both real samples from the training dataset and generated samples from the generator, along with the corresponding conditioning information. It learns to classify them as real or fake.

- Conditioning Information:

- The conditioning information can be in various forms, depending on the task. For example, in image generation, it can be class labels indicating the desired class of the generated image.

- The conditioning information is provided as input to both the generator and discriminator networks, allowing them to generate or discriminate samples based on the specified conditions.

- Training Process:

- Generator Training: The generator aims to generate samples that fool the discriminator into classifying them as real. It receives random noise and conditioning information as input and generates synthetic samples.

- Discriminator Training: The discriminator receives both real samples with their corresponding conditioning information and generated samples with their conditioning information. It learns to distinguish between real and generated samples, considering the conditioning information.

- Adversarial Training: The generator and discriminator are trained iteratively in an adversarial manner. The generator tries to generate samples that align with the given conditions, while the discriminator aims to correctly classify real and generated samples, considering the conditioning information.

- Convergence: Ideally, training continues until the generator produces samples that are indistinguishable from real samples and the discriminator can no longer reliably tell real from generated samples for a given condition. In practice, training is usually stopped after a fixed number of iterations or once sample quality stops improving.

By incorporating conditioning information, cGANs provide more control and specificity in the generated samples, allowing for targeted generation based on the given conditions.
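As a rough illustration of how the conditioning information reaches both networks, the sketch below encodes a class label as a one-hot vector and appends it to each network's input. Concatenation is only one common choice, assumed here for simplicity; the helper names (`one_hot`, `generator_input`, `discriminator_input`) are hypothetical and not taken from any library.

```python
import random


def one_hot(label, num_classes):
    """Encode an integer class label as a one-hot list."""
    if not 0 <= label < num_classes:
        raise ValueError("label out of range")
    return [1.0 if i == label else 0.0 for i in range(num_classes)]


def generator_input(noise, label, num_classes):
    """Input to G: the noise vector z followed by the encoded condition y."""
    return list(noise) + one_hot(label, num_classes)


def discriminator_input(sample, label, num_classes):
    """Input to D: a (real or generated) sample x followed by the same condition y."""
    return list(sample) + one_hot(label, num_classes)


# Example: a 4-dimensional noise vector conditioned on class 2 of 3.
rng = random.Random(0)
z = [rng.gauss(0.0, 1.0) for _ in range(4)]
g_in = generator_input(z, label=2, num_classes=3)
print(len(g_in))  # 4 noise values + 3 label values
```

Because the discriminator sees the label too, a realistic sample paired with the wrong label can still be rejected, which is what pushes the generator to respect the condition.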

How do generative adversarial networks work?

Generative Adversarial Networks (GANs) consist of two main components: a generator network and a discriminator network. The generator network generates synthetic samples, while the discriminator network tries to distinguish between real and generated samples. The two networks are trained together in a competitive manner, hence the term “adversarial.” Here is a high-level overview of how GANs work:

- Initialization: The generator and discriminator networks are initialized with random weights.

- Training Loop:

- Generator Training: The generator takes random noise as input and generates synthetic samples. These samples are then passed to the discriminator.

- Discriminator Training: The discriminator receives both real samples from the training dataset and generated samples from the generator. It learns to classify them as real or fake.

- Adversarial Training: The generator is updated based on the feedback from the discriminator. The goal is to generate samples that can fool the discriminator into classifying them as real.

- Repeat: The three steps above (generator training, discriminator training, and the adversarial update) are repeated for a certain number of iterations or until convergence.

- Convergence: The generator and discriminator networks are trained iteratively until they reach a point where the generator produces samples that are indistinguishable from real samples, and the discriminator cannot reliably classify between real and generated samples.

Training GANs can be challenging and requires careful balancing: if either network becomes much stronger than the other, the weaker one stops receiving a useful learning signal. As the generator improves, the discriminator must keep being updated to keep pace, and this adversarial pressure pushes the generator toward increasingly realistic samples. Once trained, the generator can be used to produce new samples that resemble the training data distribution.

GANs have been successfully applied in various domains, including image generation, text generation, and data synthesis. Several variations, such as conditional GANs, Wasserstein GANs, and progressive GANs, introduce additional techniques to improve training stability, sample quality, or control over the output.
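The adversarial feedback described in this section is usually expressed through two loss functions. The following is a minimal sketch in plain Python, assuming the discriminator outputs a probability that a sample is real; the function names are illustrative, and the generator loss shown is the widely used non-saturating variant rather than the only option.

```python
import math


def bce(prob, target):
    """Binary cross-entropy for one prediction, clipped for numerical safety."""
    eps = 1e-12
    prob = min(max(prob, eps), 1.0 - eps)
    return -(target * math.log(prob) + (1.0 - target) * math.log(1.0 - prob))


def discriminator_loss(d_real, d_fake):
    """D is rewarded for scoring real samples near 1 and generated samples near 0."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """G is rewarded when D scores its generated samples near 1 (non-saturating form)."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


# A confident discriminator has a low loss, while the generator's loss is high.
print(discriminator_loss([0.9, 0.8], [0.1, 0.2]))
print(generator_loss([0.1, 0.2]))
```

When the discriminator can no longer tell the two apart and outputs 0.5 for every sample, its loss equals 2·ln 2 and the generator loss equals ln 2, which corresponds to the convergence point described above.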

Conditional vs Unconditional GAN

Conditional GANs and unconditional GANs are two variations of Generative Adversarial Networks (GANs) that differ in how they generate samples and incorporate additional information.

Unconditional GANs: Unconditional GANs, also known as vanilla GANs, generate samples without any specific conditions or constraints. The generator network takes random noise as input and generates synthetic samples, while the discriminator network tries to distinguish between real and generated samples.

Unconditional GANs learn to capture the overall data distribution and generate samples that resemble the training data in a general sense. However, they lack control over the specific characteristics or attributes of the generated samples.

Conditional GANs: Conditional GANs, on the other hand, incorporate additional conditioning information to guide the generation process. The generator network takes both random noise and conditioning information as input and generates samples that align with the given conditions. The conditioning information can be in the form of class labels, textual descriptions, or other modalities.

By conditioning the generator on specific information, conditional GANs can generate samples that belong to a particular class, have specific attributes, or match certain criteria. The conditioning information is also provided to the discriminator network, which helps it distinguish between real samples and generated samples based on the given conditions. This conditioning enables more specific and controlled generation, allowing conditional GANs to generate samples that align with the desired attributes or characteristics.

In summary, unconditional GANs generate samples without any specific conditions, while conditional GANs incorporate additional conditioning information to guide the generation process and generate samples that align with the given conditions. Conditional GANs provide more control and specificity in the generated samples, making them suitable for tasks such as image-to-image translation, text-to-image synthesis, and data augmentation.

How does the conditional version of generative adversarial nets differ from the original version?

The conditional version of generative adversarial nets differs from the original version by incorporating additional information, known as conditioning variables, into the generative and discriminative models.

In the original version, the generative model G captures the data distribution, and the discriminative model D estimates the probability that a sample came from the training data rather than G. However, in the conditional version, the data we wish to condition on, denoted as y, is fed to both the generator and discriminator.

This allows the conditional generative adversarial net to generate data that is conditioned on specific variables or labels, providing more control over the data generation process.
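Written as formulas, the two objectives differ only in the conditioning on y. Here x is a real sample, z is a noise vector drawn from the prior p_z, and y is the conditioning variable. The first line is the standard GAN minimax objective, and the second is the conditional form given by Mirza and Osindero:

```latex
% Original GAN objective
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]

% Conditional GAN objective: both D and G additionally receive y
\min_G \max_D V(D, G) =
  \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x \mid y)\big]
  + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z \mid y))\big)\big]
```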

What are the uses of conditional GANs?

Conditional Generative Adversarial Networks (cGANs) have several uses and applications. Here are some common uses of conditional GANs:

- Image-to-Image Translation: Conditional GANs can be used for tasks such as image-to-image translation, where the goal is to convert an input image from one domain to another. For example, converting a daytime image to a nighttime image or transforming a sketch into a realistic image.

- Text-to-Image Synthesis: Conditional GANs can generate realistic images based on textual descriptions. By conditioning the generator on text inputs, the model can generate images that match the given descriptions.

- Image Inpainting: Conditional GANs can be used for image inpainting, which involves filling in missing or corrupted parts of an image. By conditioning the generator on the available image information, the model can generate plausible and coherent completions for the missing regions.

- Style Transfer: Conditional GANs can be used for style transfer tasks, where the goal is to transfer the style of one image onto another. By conditioning the generator on the style image, the model can generate an output image that combines the content of the input image with the style of the conditioning image.

- Data Augmentation: Conditional GANs can be used for data augmentation, which involves generating synthetic data to increase the size and diversity of a training dataset. By conditioning the generator on specific attributes or labels, the model can generate new samples that belong to the desired classes or have specific characteristics.

These are just a few examples of the uses of conditional GANs. The flexibility and generative power of GANs make them applicable to various domains, including computer vision, natural language processing, and data synthesis.
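To make the data augmentation use case more concrete, the sketch below works out which class labels a trained conditional generator would need to be asked for in order to balance an imbalanced dataset. It assumes a simple "match the largest class" policy, and `labels_to_generate` is a hypothetical helper; the generator itself is not shown.

```python
from collections import Counter


def labels_to_generate(train_labels):
    """Return the list of labels to condition on so every class matches the largest one."""
    counts = Counter(train_labels)
    target = max(counts.values())
    plan = []
    for label in sorted(counts):
        # One synthetic sample is requested for each missing example of this class.
        plan.extend([label] * (target - counts[label]))
    return plan


labels = ["cat", "cat", "cat", "dog", "bird", "bird"]
print(labels_to_generate(labels))  # conditions to feed to the generator, one per synthetic sample
```

Each label in the returned list would be paired with a fresh noise vector and passed to the generator, so the synthetic samples fill exactly the under-represented classes.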

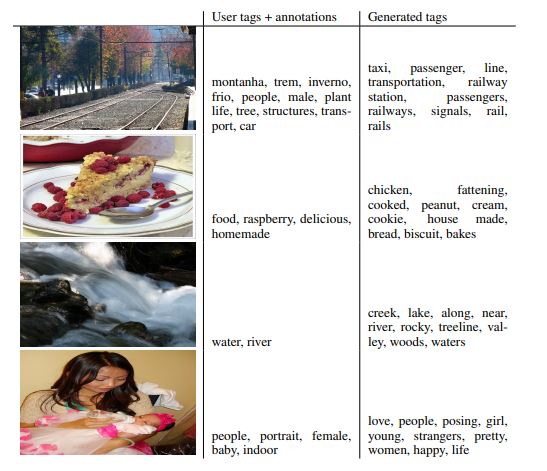

What are the potential benefits and limitations of using this approach for image tagging?

One potential benefit of using conditional generative adversarial nets for image tagging is that they can help address the issue of one-to-many mappings from input to output. In image labeling, many different tags could appropriately apply to a given image, and different annotators may use different but typically synonymous or related terms to describe the same image.

By leveraging additional information from other modalities, such as natural language corpora, to learn a vector representation for labels in which geometric relations are semantically meaningful, the conditional generative adversarial net can make predictions in such spaces and benefit from the fact that when prediction errors occur, they are still often ‘close’ to the truth.

However, one potential limitation of this approach is that it may require a large amount of training data to learn the complex relationships between images and their corresponding tags. Additionally, the quality of the generated tags may depend on the quality of the natural language corpus used to learn the vector representation for labels. Finally, the approach may not be suitable for all types of image tagging tasks, and other approaches may be more appropriate depending on the specific task and available data.
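The idea that prediction errors in a semantic label space are still "close" to the truth can be illustrated with a nearest-neighbour lookup. In this sketch the three-dimensional tag vectors are made-up toy values rather than embeddings learned from a real corpus, and `nearest_tags` is a hypothetical helper that ranks tags by cosine similarity to a generated vector.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def nearest_tags(vector, tag_embeddings, k=2):
    """Return the k tags whose embeddings are most similar to the given vector."""
    ranked = sorted(
        tag_embeddings,
        key=lambda tag: cosine(vector, tag_embeddings[tag]),
        reverse=True,
    )
    return ranked[:k]


# Toy embedding table (assumed values): related words sit close together.
TAG_EMBEDDINGS = {
    "dog": [0.9, 0.1, 0.0],
    "puppy": [0.8, 0.2, 0.1],
    "car": [0.0, 0.1, 0.9],
}

generated = [0.85, 0.15, 0.05]  # stand-in for a tag vector produced by the generator
print(nearest_tags(generated, TAG_EMBEDDINGS, k=2))
```

Even if the generated vector does not land exactly on the annotator's chosen tag, its nearest neighbours are semantically related words, whereas an unrelated tag such as "car" stays far away.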

The learning process of Conditional Generative Adversarial Networks

The learning process of Conditional Generative Adversarial Networks (cGANs) involves training both the generator and discriminator networks in an adversarial manner. Here is a step-by-step explanation of the learning process:

- Initialization: The generator and discriminator networks are initialized with random weights.

- Data Preparation: The training dataset is prepared, consisting of real samples along with their corresponding conditioning information. For example, in image generation, each real image is paired with a class label indicating its category.

- Training Loop:

- Generator Training:

- Random noise vectors and corresponding conditioning information are sampled from their respective distributions.

- The generator takes these noise vectors and conditioning information as input and generates synthetic samples.

- The generated samples are passed to the discriminator along with their corresponding conditioning information.

- The generator aims to generate samples that the discriminator classifies as real, fooling it into believing they are genuine.

- Discriminator Training:

- Real samples from the training dataset, along with their conditioning information, are randomly selected.

- Generated samples from the generator, along with their corresponding conditioning information, are obtained.

- The discriminator receives both real and generated samples, along with their conditioning information, and learns to classify them correctly.

- The discriminator aims to correctly distinguish between real and generated samples, considering the conditioning information.

- Adversarial Training:

- The generator and discriminator are trained iteratively in an adversarial manner.

- The generator tries to improve its ability to generate samples that fool the discriminator, considering the conditioning information.

- The discriminator aims to improve its ability to correctly classify real and generated samples, considering the conditioning information.

- The generator and discriminator update their weights based on the gradients obtained from the adversarial training process.

- Convergence: The training loop continues for a certain number of iterations or until a convergence criterion is met. Convergence is typically considered reached when the generator produces samples that are indistinguishable from real samples, and the discriminator cannot reliably classify between real and generated samples, considering the conditioning information.

- Evaluation and Testing:

- Once training is complete, the generator can be used to generate new samples based on the given conditioning information.

- The quality of the generated samples can be evaluated using various metrics, such as visual inspection, quantitative measures, or domain-specific evaluation criteria.

The learning process of cGANs can be challenging and requires careful tuning of hyperparameters and network architectures.
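The steps above can be sketched end to end on a deliberately tiny problem. Everything in this example is an assumption made for illustration: the data are one-dimensional with two labels, the generator is a per-label offset plus noise, the discriminator is a per-label logistic regression, and the gradients are written out by hand instead of using a deep learning framework.

```python
import math
import random

REAL_MEANS = {0: -2.0, 1: 2.0}  # assumed toy data: label y -> mean of the real samples


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def sample_real(y, rng):
    """Draw a real sample x for label y from the hypothetical dataset."""
    return REAL_MEANS[y] + 0.1 * rng.gauss(0.0, 1.0)


def generate(g_params, z, y):
    """G(z | y): a learned per-label offset plus scaled noise."""
    return g_params[y] + 0.1 * z


def discriminate(d_params, x, y):
    """D(x | y): logistic regression with a per-label weight and bias."""
    a, c = d_params[y]
    return sigmoid(a * x + c)


def train_step(g_params, d_params, rng, lr=0.05):
    """One discriminator update followed by one generator update, for each label."""
    d_total, g_total = 0.0, 0.0
    for y in sorted(REAL_MEANS):
        z = rng.gauss(0.0, 1.0)
        x_real = sample_real(y, rng)
        x_fake = generate(g_params, z, y)

        # Discriminator step: minimise -log D(x_real|y) - log(1 - D(x_fake|y)).
        d_real = discriminate(d_params, x_real, y)
        d_fake = discriminate(d_params, x_fake, y)
        d_total += -math.log(d_real) - math.log(1.0 - d_fake)
        a, c = d_params[y]
        grad_a = (d_real - 1.0) * x_real + d_fake * x_fake
        grad_c = (d_real - 1.0) + d_fake
        d_params[y] = (a - lr * grad_a, c - lr * grad_c)

        # Generator step: minimise -log D(G(z|y)|y), the non-saturating loss.
        a, _ = d_params[y]
        d_fake = discriminate(d_params, x_fake, y)
        g_total += -math.log(d_fake)
        grad_offset = (d_fake - 1.0) * a  # chain rule through x_fake = offset + 0.1 * z
        g_params[y] -= lr * grad_offset
    return d_total, g_total


def train(steps, seed=0):
    """Initialise both networks and run the alternating training loop."""
    rng = random.Random(seed)
    g_params = {0: 0.0, 1: 0.0}
    d_params = {0: (0.0, 0.0), 1: (0.0, 0.0)}
    losses = (0.0, 0.0)
    for _ in range(steps):
        losses = train_step(g_params, d_params, rng)
    return g_params, d_params, losses


if __name__ == "__main__":
    g_params, _, (d_loss, g_loss) = train(30)
    print(g_params, d_loss, g_loss)
```

After a few dozen steps the two generator offsets should begin moving in opposite directions, each toward the mean of its own class, which is the conditioning at work. As with real GAN training, longer runs of such a simple setup may oscillate rather than settle, so this is a sketch of the update structure and not a tuned training recipe.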

Reference: Mehdi Mirza and Simon Osindero, "Conditional Generative Adversarial Nets."